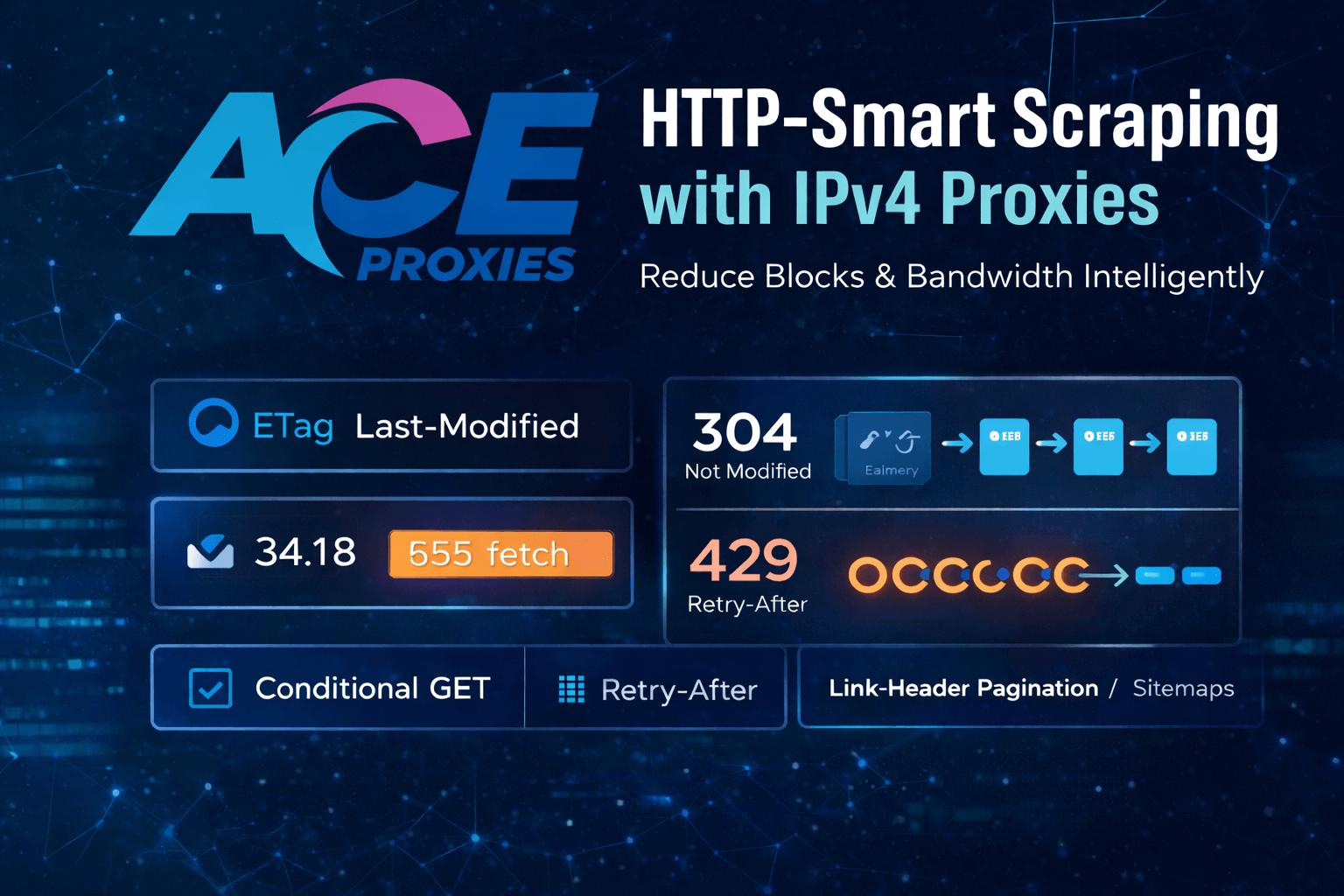

HTTP-Smart Scraping with IPv4 Proxies

Conditional Requests, Caching, and Pagination That Reduce Blocks

Most scraping guides focus on rotating more IPs or endlessly tweaking headers. That approach works, until it doesn’t. The largest efficiency gains many teams miss have little to do with proxies at all. They come from using HTTP the way it was designed to be used.

By applying conditional requests, proper caching semantics, pagination contracts, and respectful rate-limit handling, you can significantly reduce bandwidth, lower detection risk, and stabilize performance using plain IPv4 proxies.

This guide focuses on HTTP-first scraping, not browser emulation. The techniques below are grounded in HTTP standards and are practical for real-world production pipelines.

The One-Line Strategy: Don’t Fetch What Hasn’t Changed

HTTP includes built-in mechanisms that allow clients to avoid unnecessary downloads:

- ETag: a version identifier for a resource

- Last-Modified: a timestamp indicating when the resource last changed

- If-None-Match / If-Modified-Since: conditional request headers

- 304 Not Modified: confirmation that cached content is still valid

When used correctly, these mechanisms sharply cut full content downloads: a 304 response carries headers only, so an unchanged resource costs a small fraction of a full page. Fewer large responses mean less suspicious traffic and lower infrastructure costs.

In practice, this makes your scraper behave like a normal client revalidating content, rather than repeatedly re-downloading the same pages.

Conditional GETs: A Practical Playbook

- Prime once: Fetch the resource normally and capture the ETag and/or Last-Modified headers.

- Store validators per URL: Persist these values alongside request metadata.

- Revalidate on subsequent runs: Send If-None-Match with the stored ETag, or If-Modified-Since when only a timestamp is available.

- Handle 304 responses correctly: A 304 Not Modified response means the cached content can be reused without downloading the body again.

- Fall back when validators are missing: If neither header exists, rely on longer recheck intervals or sitemap-guided scheduling.

- Avoid HEAD-only loops: Some servers do not return validators on HEAD requests. Conditional GETs are more reliable.

- Preserve ETag formatting: Keep the surrounding quotes and any W/ weak-validator prefix exactly as received.

- Measure the outcome: Track the 304 rate and bytes saved per route.
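The playbook above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: `CacheEntry`, `conditional_headers`, and `handle_response` are hypothetical names, the cache is a plain in-memory dict, and the HTTP client itself is left out so that the status code, headers, and body can come from whichever client you already use.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class CacheEntry:
    """Stored copy of a resource plus its validators (hypothetical structure)."""
    body: bytes
    etag: Optional[str] = None           # kept verbatim, quotes and W/ prefix included
    last_modified: Optional[str] = None  # kept verbatim as the server's date string


def conditional_headers(entry: Optional[CacheEntry]) -> Dict[str, str]:
    """Build revalidation headers from whatever validators were stored."""
    if entry is None:
        return {}
    if entry.etag:
        return {"If-None-Match": entry.etag}
    if entry.last_modified:
        return {"If-Modified-Since": entry.last_modified}
    return {}


def handle_response(
    cache: Dict[str, CacheEntry],
    url: str,
    status: int,
    headers: Dict[str, str],
    body: bytes,
) -> Tuple[bytes, bool]:
    """Return (content, served_from_cache) and refresh the cache on a 200."""
    if status == 304 and url in cache:
        # Not Modified: reuse the stored body, nothing was downloaded.
        return cache[url].body, True
    if status == 200:
        lowered = {k.lower(): v for k, v in headers.items()}
        cache[url] = CacheEntry(
            body=body,
            etag=lowered.get("etag"),
            last_modified=lowered.get("last-modified"),
        )
        return body, False
    raise RuntimeError(f"unexpected status {status} for {url}")
```

On each run, call `conditional_headers(cache.get(url))`, merge the result into the outgoing request, and pass the response to `handle_response`. A production pipeline would persist the cache rather than keep it in memory.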

Teams often see lower average payload sizes and steadier latency without changing their IP mix.

Pagination Without a Browser

Many APIs and HTML endpoints expose pagination through the Link header:

Link: <https://example.com?page=2>; rel="next"

This method is standardized and allows deterministic traversal without relying on fragile DOM selectors. Parsing rel="next" replaces guesswork with predictable crawling.
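As a rough sketch of what parsing a Link header can look like, the snippet below extracts relation types with the standard library only. The function names `parse_link_header` and `next_url` are illustrative, and the regular expressions cover the common header shapes rather than every corner of the grammar.

```python
import re
from typing import Dict, Optional
from urllib.parse import urljoin

# One parameter such as ; rel="next" or ; rel=next
_PARAM = re.compile(r';\s*([\w*-]+)\s*(?:=\s*(?:"([^"]*)"|([^\s";,]+)))?')
# One link value: <target> followed by zero or more parameters
_LINK = re.compile(
    r'<([^>]*)>((?:\s*;\s*[\w*-]+\s*(?:=\s*(?:"[^"]*"|[^\s";,]+))?)*)'
)


def parse_link_header(value: str) -> Dict[str, str]:
    """Map each relation type (next, prev, ...) to its target URL."""
    links: Dict[str, str] = {}
    for target, params in _LINK.findall(value):
        for name, quoted, bare in _PARAM.findall(params):
            if name.lower() == "rel":
                # A single rel value may list several space-separated relations.
                for rel in (quoted or bare).split():
                    links.setdefault(rel.lower(), target)
    return links


def next_url(link_header: Optional[str], request_url: str) -> Optional[str]:
    """Return the absolute URL of the next page, or None on the last page."""
    if not link_header:
        return None
    target = parse_link_header(link_header).get("next")
    # Targets may be relative, so resolve them against the request URL.
    return urljoin(request_url, target) if target else None
```

A crawl loop then keeps requesting `next_url(...)` until it returns `None`, with no DOM selectors involved.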

Sitemaps remain useful for discovery and scheduling. While they do not guarantee content changes, they help prioritize which URLs should be revalidated sooner.

Practical workflow:

- Fetch sitemap indexes during warm-up

- Enqueue new URLs immediately

- Delay checks for older URLs

- Use conditional GETs during revalidation

This keeps crawls lean and consistent.
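The workflow above might be sketched as follows. It assumes the sitemap XML has already been fetched, that `seen` is a hypothetical mapping from known URLs to the last `lastmod` value observed for them, and that a one-day recheck delay is an acceptable placeholder. The same parser also reads sitemap index files, since both formats keep the URL in a `loc` child element.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional, Tuple

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_sitemap(xml_text: str) -> List[Tuple[str, Optional[str]]]:
    """Return (loc, lastmod) pairs from a urlset or sitemapindex document."""
    root = ET.fromstring(xml_text)
    entries = []
    for node in root:
        loc = (node.findtext("sm:loc", default="", namespaces=NS) or "").strip()
        lastmod = (node.findtext("sm:lastmod", default="", namespaces=NS) or "").strip()
        if loc:
            entries.append((loc, lastmod or None))
    return entries


def schedule(
    entries: List[Tuple[str, Optional[str]]],
    seen: Dict[str, Optional[str]],
    now: datetime,
    recheck_delay: timedelta = timedelta(days=1),
) -> List[Tuple[datetime, str]]:
    """Enqueue new or changed URLs immediately and delay the rest."""
    plan = []
    for loc, lastmod in entries:
        is_new = loc not in seen
        # lastmod is only a hint, so a differing value raises priority
        # but the actual check is still a conditional GET.
        changed = lastmod is not None and seen.get(loc) != lastmod
        due = now if (is_new or changed) else now + recheck_delay
        plan.append((due, loc))
    return sorted(plan)
```

Each scheduled URL is then revalidated with a conditional GET when it comes due, so an inaccurate `lastmod` costs one cheap request rather than a full download.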

Rate-Limit Discipline: Treat 429 as a Contract

A 429 Too Many Requests response is not a failure. It is a signal.

When servers include a Retry-After header, honor it. When it is missing, apply exponential backoff with jitter. This prevents retry storms and reduces the likelihood of IPs being flagged as abusive.

Best practices:

- Respect Retry-After on 429 and 503 responses

- Apply jitter to avoid synchronized retries

- Cap concurrency per origin

- Slow routes globally after repeated 429s
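One way to turn these rules into code is sketched below. The helper names and the default base and cap values are assumptions chosen for illustration. `Retry-After` may carry either a number of seconds or an HTTP date, so both forms are handled, and the fallback uses the "full jitter" variant of exponential backoff.

```python
import random
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Dict, Optional


def retry_after_seconds(value: Optional[str], now: Optional[datetime] = None) -> Optional[float]:
    """Parse Retry-After as delta-seconds or an HTTP date; None if unusable."""
    if not value:
        return None
    value = value.strip()
    if value.isdigit():
        return float(value)
    try:
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):
        return None
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return max(0.0, (when - now).total_seconds())


def backoff_seconds(attempt: int, base: float = 1.0, cap: float = 60.0) -> float:
    """Exponential backoff with full jitter: uniform in [0, min(cap, base * 2^attempt)]."""
    return random.uniform(0.0, min(cap, base * (2 ** attempt)))


def next_delay(status: int, headers: Dict[str, str], attempt: int) -> float:
    """Seconds to wait before retrying; 0.0 when no throttling was signalled."""
    if status in (429, 503):
        hinted = retry_after_seconds(headers.get("Retry-After"))
        # The server's hint wins; jittered backoff is only the fallback.
        return hinted if hinted is not None else backoff_seconds(attempt)
    return 0.0
```

The random component matters because workers that were throttled at the same moment would otherwise retry at the same moment too.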

Quiet traffic patterns protect IPv4 reputation far more effectively than aggressive rotation.

IPv4 Performance Without Complexity

You do not need HTTP/2 or HTTP/3 to gain stability benefits. Over HTTP/1.1, persistent connections using keep-alive reduce handshake overhead and smooth pacing.

Implementation tips:

- Use small per-origin connection pools

- Reuse connections when allowed

- Respect Connection: close headers

- Avoid opening and closing sockets per request
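A small per-origin pool can be sketched with Python's standard `http.client`. The `OriginPool` class is a hypothetical name, it keeps a single connection per origin for simplicity, and routing through a proxy is deliberately left out. `http.client` already closes the socket when the server sends `Connection: close` and reopens it on the next request, so that header is respected without extra code.

```python
import http.client
from typing import Dict, Optional, Tuple
from urllib.parse import urlsplit


class OriginPool:
    """Keeps one persistent connection per (scheme, host, port)."""

    def __init__(self, timeout: float = 10.0) -> None:
        self._timeout = timeout
        self._conns: Dict[Tuple[str, str, int], http.client.HTTPConnection] = {}

    def connection_for(self, url: str) -> http.client.HTTPConnection:
        parts = urlsplit(url)
        port = parts.port or (443 if parts.scheme == "https" else 80)
        key = (parts.scheme, parts.hostname or "", port)
        conn = self._conns.get(key)
        if conn is None:
            cls = (
                http.client.HTTPSConnection
                if parts.scheme == "https"
                else http.client.HTTPConnection
            )
            # No socket is opened here; it connects lazily on the first request.
            conn = cls(key[1], port, timeout=self._timeout)
            self._conns[key] = conn
        return conn

    def get(self, url: str, headers: Optional[Dict[str, str]] = None):
        parts = urlsplit(url)
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn = self.connection_for(url)
        conn.request("GET", path, headers=headers or {})
        resp = conn.getresponse()
        body = resp.read()  # the body must be fully read before the socket is reused
        return resp.status, dict(resp.getheaders()), body

    def close(self) -> None:
        for conn in self._conns.values():
            conn.close()
        self._conns.clear()
```

This sketch does not retry when a server silently drops an idle connection; a production pool would catch that error and reissue the request once.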

Connection reuse often improves p95 latency and reduces retry pressure.

What to Measure Weekly

- 304 rate and bytes saved per route

- Retries per success and p95 latency

- 429 compliance and delay accuracy

- Pagination method usage: Link headers versus DOM parsing

- Sitemap accuracy versus actual change detection
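The first item on this list can be tracked with a few counters. The sketch below is illustrative only: `RouteStats` and `Metrics` are hypothetical names, and bytes saved are estimated from the size of the cached copy that a 304 response allowed the scraper to reuse.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import DefaultDict


@dataclass
class RouteStats:
    requests: int = 0
    not_modified: int = 0
    bytes_downloaded: int = 0
    bytes_saved: int = 0  # estimate: size of the cached body reused on a 304


class Metrics:
    def __init__(self) -> None:
        self.routes: DefaultDict[str, RouteStats] = defaultdict(RouteStats)

    def record(self, route: str, status: int, body_bytes: int, cached_bytes: int = 0) -> None:
        """Count one response for a route."""
        stats = self.routes[route]
        stats.requests += 1
        if status == 304:
            stats.not_modified += 1
            stats.bytes_saved += cached_bytes
        else:
            stats.bytes_downloaded += body_bytes

    def rate_304(self, route: str) -> float:
        """Share of requests on this route answered with 304 Not Modified."""
        stats = self.routes.get(route)
        if stats is None or stats.requests == 0:
            return 0.0
        return stats.not_modified / stats.requests
```

Reviewing these figures per route, rather than as one global number, shows which endpoints actually support validators and which need a different schedule.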

Make one change per route per week and hold. Stability beats constant tuning.

Compliance Matters

Respect robots.txt. It is not informal guidance; the Robots Exclusion Protocol is a published standard (RFC 9309). Treat it as an input to scheduling and route selection, not something to ignore.

Lower noise and fewer unnecessary requests reduce both legal and operational risk.
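Feeding robots.txt into scheduling could look like the sketch below, which uses Python's standard `urllib.robotparser`. The function names, the `example-bot` user agent, and the one-second default delay are assumptions for illustration. `Crawl-delay` is a widely seen extension rather than part of the core standard, so it is treated as optional.

```python
from typing import Optional
from urllib.robotparser import RobotFileParser


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def plan_route(
    parser: RobotFileParser,
    user_agent: str,
    url: str,
    default_delay: float = 1.0,
) -> Optional[float]:
    """Return the delay in seconds to use for this URL, or None if disallowed."""
    if not parser.can_fetch(user_agent, url):
        return None  # drop the route instead of scheduling it
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default_delay
```

For example, with rules that disallow `/private/` and set `Crawl-delay: 5`, `plan_route(rules, "example-bot", "https://example.com/public")` yields a five-second delay, while any URL under `/private/` is skipped.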

When to Escalate to a Browser

Some routes genuinely require browser environments due to complex client-side checks. When that happens, escalate intentionally and track cost per successful result.

Once stable, de-escalate back to HTTP-first workflows whenever possible. Most public pages do not require full browser automation.

Key Takeaways

- Use conditional GETs wherever possible

- Let Link headers and sitemaps guide traversal

- Honor 429 Retry-After signals

- Reuse connections with keep-alive

- Measure outcomes, not IP counts

Recommended lanes:

- High-volume public pages: Datacenter IPv4 proxies

- Geographic distribution: Rotating Residential proxies

- Session continuity: Static Residential (ISP) proxies

Next Steps

If you want scraping performance without unnecessary complexity, start with HTTP semantics before adding more IPs or heavier tooling.
